Turing Award Winner, Chief AI Scientist of Facebook and Hero of France

[Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work.]

This is Part Four of the Plato and Young Icarus series. Part One set out the debate in neoclassical terms between those who would slow down AI and those who would speed it up. Part Two shared the story of the great visionary of AI, Ray Kurzweil. Part Three told the tale of Jensen Huang, the CEO and founder of NVIDIA. Now in Part Four we share the story of Yann LeCun, Turing Award winner, hero of France and Facebook. He will not stop his efforts to fly to the sun of super intelligence and is astonished by his friends and colleagues who are turning back.

Here is the full Plato and Young Icarus series.

- Plato and Young Icarus Were Right: do not heed the frightening shadow talk giving false warnings of superintelligent AI.

- Ray Kurzweil: Google’s prophet of superintelligent AI who will not slow down.

- Jensen Huang’s Life and Company – NVIDIA: building supercomputers today for tomorrow’s AI, his prediction of AGI by 2028 and his thoughts on AI safety, prosperity and new jobs.

- Yann LeCun: Turing Award Winner, Chief AI Scientist of Facebook and Hero of France, Who Will Not Stop Flying to the Sun.

These ideas are illustrated below in neoclassical and comic book styles by Ralph Losey using his Visual Muse GPT.

Yann LeCun

Yann LeCun does not favor slowing down and thinks the fears of AI are misplaced. He is on social media almost daily getting this message out. As a winner of the prestigious Turing Award in 2018, an NYU Professor and Chief AI Scientist for Facebook AI Research (FAIR) since 2013, Yann LeCun has incredible scientific credentials to go with his distinctive French accent. I for one am persuaded, and so too apparently is his home country of France. On December 6, 2023, Yann LeCun was awarded the Chevalier de la Légion d’Honneur by President Macron at the Élysée Palace.

In addition to leading Facebook’s AI, Yann LeCun is a part-time Professor at New York University in various AI research positions. Professor LeCun received his PhD in Computer Science from Université Pierre et Marie Curie (Paris) in 1987. After a postdoc at the University of Toronto, he joined AT&T Bell Laboratories in NJ in 1988 to do early AI research, and joined NYU in 2003 as a professor. Yann was also a director, with Yoshua Bengio, of the Canadian Institute for Advanced Research (CIFAR) program on Neural Computation and Adaptive Perception.

Professor LeCun’s research specializes in machine learning and AI, with applications in computer vision, natural language comprehension, robotics, and computational neuroscience. He is best known for his work in deep learning and contributions to the convolutional network method, which is widely used for image, video and speech recognition. This is illustrated by the next two images, one in classical style, the other in comic book style using Visual Muse and Photoshop.

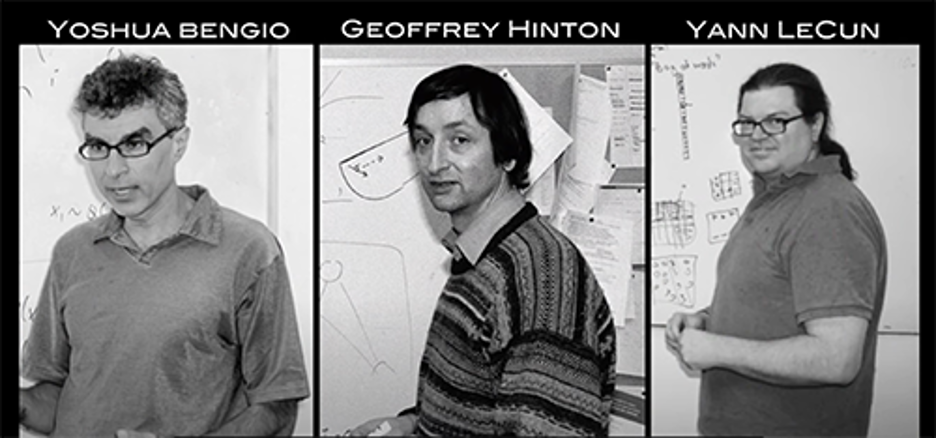

In 2018 Yann LeCun received the Turing Award, considered the Nobel Prize of computing, with fellow CIFAR scientists Yoshua Bengio and Geoffrey Hinton. The award was “for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing.” For more on the background of neural net research and their breakthrough work, see the excellent video by the Association for Computing Machinery (ACM), which runs the Turing Award, Turing Award 2018: Yoshua Bengio, Yann LeCun and Geoffrey Hinton. These three – Bengio – Hinton – LeCun – have since been referred to as the Godfathers of AI. In the below image from the ACM video you see them as young research scientists. At that time research into neural network AI was considered a dead-end pursuit that would never lead anywhere. In fact, most scientists in the eighties, nineties, and even up to 2005, thought they were crazy to even think AI could be based on the neural network design of the human brain. Their work was not really accepted until 2012 when their approach blew away the competition in the ImageNet Challenge. ACM Turing Award video at 5:45.

These young scientists were not deterred by the warnings of the establishment Daedaluses. They kept on trying, just like Icarus, and eventually reached the AI we see today. The illustrations that follow are of the young Godfathers generated by Midjourney AI. They are depicted in a neoclassical style and a comic book style. (See if your brain’s pattern recognition abilities can detect who’s who.)

In an unexpected twist of fate, two of the Godfathers who reached the sun have come to fear their heights. As mentioned in Part One of the series, Geoffrey Hinton, by far the oldest and best known of the three, resigned from Google in April 2023, right after GPT-4 was released. Hinton, contrary to Google’s other even more senior AI scientist, Ray Kurzweil, started warning the world of the scary dangers of AI and arguing that AI research should slow down or pause. Yoshua Bengio agrees with Hinton, but for different reasons, and has said that he feels ‘lost’ over his life’s work. Yann LeCun seems to be the only one of the big three happy about what they have done and eager to do more. He respectfully disagrees with both Hinton and Bengio.

In a December 15, 2023, interview of Yann LeCun by CBS, which I highly recommend, he discussed this disagreement with his good friends and colleagues. Click here for this segment on YouTube or click the image below.

Yann LeCun’s Message to Calm Down and Keep Going

The theme of this Plato and Young Icarus series is that Yann LeCun is right, that we should keep going to the sun of super-intelligence, that we should not be cowed by the cave of fearful sci-fi shadows. I do not necessarily share all of the reasoning of LeCun expressed in this short video excerpt. Like Yoshua Bengio I am skeptical of most governments and profit-driven corporations. Unlike Bengio, however, I do not think government regulation is the answer, for the same reason. Moreover, I evaluate the existential risks of our current situation on Earth to be greater than Yoshua Bengio apparently does. These present risks from our still relatively un-enhanced society justify the small risks of continuing AI flight. As Yann LeCun points out later in the CBS interview, dismissing the bogus self-selecting poll which suggested a 40% belief rate, almost no one in the AI community actually thinks there is a real risk of AI species extermination. Meta’s Chief AI Scientist Yann LeCun talks about the future of artificial intelligence (CBS Mornings, 12/15/23) at 22:50-23:14.

LeCun persuasively argues that risks are lowered as AI becomes smarter. He also provides specific examples. He does this again in a second video to follow by the World Science Festival, where we will show the video excerpt. In this CBS interview LeCun explains that a risk to humanity is simply not possible because agency is necessarily a part of AI. The AI just acts as our agent and, despite sci-fi to the contrary, we can stop it at any time. This is concisely explained by Yann in the Meta’s Chief AI Scientist interview at 31:33-31:54 and 33:31-36:28. As Ray Kurzweil puts it, we are the AI. Ray Kurzweil: Google’s prophet of superintelligent AI who will not slow down. This already seems obvious to me now, that AI is a tool we use, not a conscious creature. See e.g., What Is The Difference Between Human Intelligence and Machine Intelligence.

These concepts are depicted by AI in the image below using Visual Muse in three styles: classical, comic book and combination of both.

LeCun does not favor slowing down the research and development of AI. Like Kurzweil, and Jensen Huang, he thinks the fears of AI are misplaced. Jensen Huang’s Life and Company – NVIDIA: building supercomputers today for tomorrow’s AI, his prediction of AGI by 2028 and his thoughts on AI safety, prosperity and new jobs.

Yann LeCun uses social media on a regular basis to try to counter the fear-based, slow-down messages of other scientists, government regulators and most of the news and commentary media. I suspect that Yann has AI help in writing his many tweets and other media messages.

Yann LeCun recently summarized very well his social media message on Twitter (I refuse to call it X), with this tweet:

(0) there will be superhuman AI in the future

(1) they will be under our control

(2) they will not dominate us nor kill us

(3) they will mediate all of our interactions with the digital world

(4) hence, they will need to be open platforms so that everyone can contribute to training and tuning them.

LeCun Twitter feed

Two more tweets on December 17, 2023, provide more detail on his thinking.

Technologies that empower humans by increasing communication, knowledge, and effective intelligence always cause opposition from people, governments, or institutions who want control and fear other humans. The arguments against open source AI today mirror older arguments against social media, the internet, printers, the PC, photocopiers, public education, the printing press,…. Historically, those who have banned or limited access to these things have not been believers in democracy.

The emergence of superhuman AI will not be an event. Progress is going to be progressive. . . . At some point, we will realize that the systems we’ve built are smarter than us in almost all domains. This doesn’t necessarily mean that these systems will have sentience or “consciousness” (whatever you mean by that). But they will be better than us at executing the tasks we set for them. They will be under our control. . . . Language/code are easy because they are discrete domains. Code is particularly easy because the underlying “world” is fully observable and deterministic. The real world is continuous, high-dimensional, partially observable, and non-deterministic: a perfect storm. The ability of animals to deal with the real world is what makes them intrinsically smarter than text-only AI.

Yann LeCun, Tweet One and Tweet Two.

The arguments of all sides on this important issue, including LeCun’s, are found in the second half of a two-hour panel discussion at the 2023 World Science Festival. AI: Grappling with a New Kind of Intelligence. Remember, two-sided debates like this are easy to understand, but distortive, and can create a false polarization. As Yann LeCun explained in the CBS video excerpt shown above, there is actually a tremendous number of things that all experts on AI agree upon, including the great promise of AI. See e.g. How We Can Have AI Progress Without Sacrificing Safety or Democracy written by Yoshua Bengio and Daniel Privitera (Time, 11/08/23) (“Yes, people have different opinions about AI regulation and yes, there will be serious disagreements. But we should not forget that mostly, we want similar things. And we can have them all: progress, safety, and democratic participation.”) For a better understanding of the go-slow, Daedalus side of this debate, also see e.g. Samuel, The case for slowing down AI (Vox 3/20/23) (well-written news article discussing the potential risks of AI and the case for slowing down its progress.)

To conclude the presentation of the position of Yann LeCun, who is the Plato for today’s young AI Icarus engineers, watch these short excerpts from the 2023 World Science Festival video, AI: Grappling with a New Kind of Intelligence. In the first video he provides technical background. Two young Daedalus debaters then join him on stage, and the last two videos show LeCun’s counter-arguments. Click here for this segment on YouTube or click the image below.

For more on the recent breakthroughs by Nvidia on building supercomputers, see Jensen Huang’s Life and Company – NVIDIA: building supercomputers today for tomorrow’s AI. Click here for the next segment on YouTube or click the image below.

Also see the insights of Professor Blake Richards on why we should not fear superintelligent AI, but should fear mediocre AI. The Insights of Neuroscientist Blake Richards and the Terrible Bad Decision of OpenAI to Fire Sam Altman (“The possibility of superintelligence is what makes me more confident that the AIs will eventually cooperate with us. That’s what a superintelligent system would do. What I fear more, funnily enough, are dumb AI systems, AI systems that don’t figure out what’s best for their own survival, but which, instead, make mistakes along the way and do something catastrophic. That, I fear much more.”) Click here for the next segment on YouTube or click the image below.

Yann LeCun here points out that technology progression is the history of the world, and it will be the good guys’ AI against the bad guys’ AI.

Many agree with this argument. See: Judy Lin, Best AI Safety is ensuring good guys innovate much faster: former Foxconn and MIH CTO William Wei (Nov. 7, 2023). This article quotes the impressive Taiwanese engineer and entrepreneur, William Wei. With his extensive experience in California and mainland China, William Wei knows what he is talking about. Here are his own rather colorful words on the situation, which echo LeCun’s position.

In the event of AI regulation is imposed, guess whose innovation will be harnessed, the good guys or the bad guys? Regulation is a double-edged sword, even though the regulators have good intentions to prevent risks, the more likely scenario is that only the good guys listen to them and stop innovating, while the bad guys grab the chance and continue to progress with their purposes. . . .

If regulation is necessary, then human beings should be the subject of such regulation, not AI. Today’s AI is only math; it doesn’t even care if you unplug it. Only human beings would have the motivation to use AI to destroy the enemies or competitors in order to secure their own survival. . . .

Well, we can only hope that the new regulations do not slow down the innovations of the good guys who could have been the people to save humanity. Since we have no control over the bad guys, the best thing we can do is to help the good guys innovate faster than the other side.

Best AI Safety is ensuring good guys innovate much faster: former Foxconn and MIH CTO William Wei.

Stay tuned for the grand finale to this Plato and Young Icarus series where we will summarize and conclude with a call to action. Fly, Icarus fly!

Ralph Losey Copyright 2023 – All Rights Reserved –. Published on edrm.net with permission.

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.